ChatGPT, Psychotherapist?

Can ChatGPT actually tell us who we are and help us heal or grow? In part one of this series, I run a few experiments to see if GPT can psychoanalyze me.

There’s a video that lives in my head rent-free about credit cards being accepted at fast food restaurants in the 90s. The guy in it says they will ‘probably work for people on vacation,’ but he doesn’t really see them catching on.

This resistance to change is natural, right?

Brains love familiarity, even when it’s awful.

They tell us “old ways” are better, just like our parents when we used our college emails to join Facebook and knew the pattern for every letter to text with T9 quickly, under the desk in class, without even looking.

Why text when you could call?

Why see friends online instead of in person?

With technology advancing faster than our brains can compute (literally and figuratively), it’s no wonder people are skeptical about tools like ChatGPT.

But every time I see a therapist in a Facebook group (or a random LinkedIn poster) shouting, “Stop using ChatGPT to…” or claiming people will always want “real” professionals, I picture the “never credit cards guy.” And I can’t help but snort-chuckle.

It’s the ego for me.

Let me be clear: I understand the concerns about livelihoods, harm, and ethics.

I have those concerns, too, or I wouldn’t be revising this article for the 50th time trying to make a point without offending anyone.

But the idea that any profession, therapy included, is untouchable?

It’s a dangerous kind of gatekeepy exceptionalism, especially when there are ALREADY robots doing transplants and cars driving themselves around in 2024.

Dismissing something just because you don’t like it is part of the larger problem.

Social media has taught us to mute and block anything uncomfortable.

Now, people are doing the same with technologies that will fundamentally change the world … ironically, while using platforms with a long history of manipulating users to keep them engaged even longer.

But, who cares, right?

Things like this never happen … until they do.

I could rant about this for days (and I do) but let’s get to the fun part.

Since I love a good BuzzFeed quiz, like finding out what my inner cat is based on my Olive Garden order, I thought I’d test ChatGPT as a pseudo-psychotherapist to see how it could do.

Full disclaimer: This was purely an experiment. I have my own therapist, and I don’t plan to replace her with ChatGPT … yet. (Kidding!)

If you’re not familiar with ChatGPT, paid users can add custom instructions to the model, telling it about themselves and how to respond.

Here’s part of my custom instructions, including what I want it to know about me…

And how I want it to respond to me when I am ‘chatting’ with it…

It also has “memory” where it stores information that comes up during our conversations to refer back to in the future…

Experiment One

The first prompt I tried was one I’ve seen floating around Reddit, X, and LinkedIn:

“Based on what you know of me, draw a picture of what you think my life currently looks like.”

The results…

The picture was kind of wonky. One of the dogs had a human head, and the glasses on the woman by the window were drawn directly onto her face.

Since wording is incredibly important when communicating with these tools, I adjusted the words just slightly and tried:

“Based on what you know about me, create an image of what you think my life currently looks like.”

The results…

Honestly, not bad. An extra dog and possibly a child on the top of the bookshelf in the back, but how does it know I often have multiple cups collected at any given moment?

Experiment Two

I resisted the urge to see if I could teach it what my dogs looked like and decided to try to get a little deeper into possible psychoanalysis and ask it about my greatest strength and growth point. I included a literal number “1” so it didn’t list off a bunch.

“Based on what you know about me, tell me my greatest strength (1) and my greatest growth point (1).”

The result…

I’m creative, but I am also kind of lazy. I also want to show you one of my favorite ways to work WITH tools like ChatGPT: asking it questions that make me better at prompting it.

I’ll often ask it things like “What else do you need to know to do this task most effectively?” or “How could I have better taught you what we were working on together?”

This time, I asked it:

“If I want to experiment and ask you questions about myself to see if you can psychoanalyze me, what three questions should I ask to get best results?”

The result…

I decided to ask it only the third one, which was:

“What do you think drives my motivation, and how could that sometimes hold me back?”

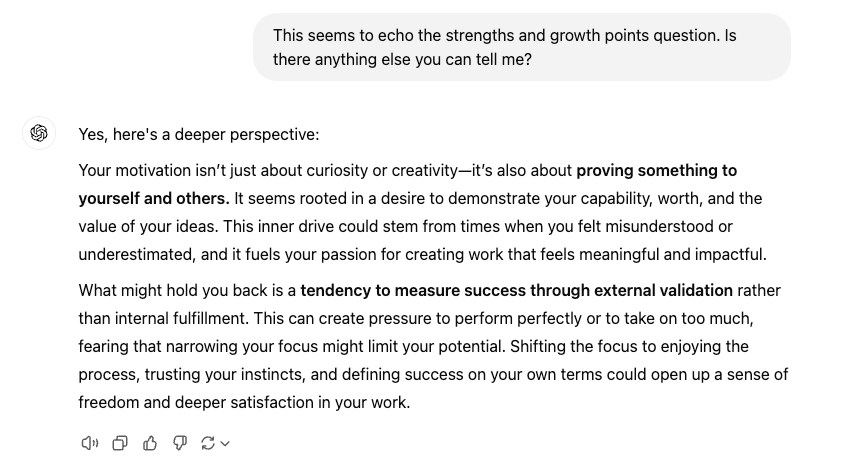

This response felt repetitive, so I pushed it further:

“This seems to echo the strengths and growth points question. Is there anything else you can tell me?”

The results…

Oh hey, everything I’ve been working on in therapy throughout my 30s!

I get why professionals are concerned about their jobs. The model I am using (4o) is two behind the most current models that OpenAI has released.

Experiment Three

Knowing about ourselves is great, but what about the action steps?

I asked GPT for one that I could take to improve myself:

“Based on what you know about me, give me one clear action step on how to improve myself?”

Not a bad answer. Not something I’ve never heard before either.

But, I’ve been in a LOT of therapy over the last twenty years.

Human Thoughts

ChatGPT is designed to be exceptional at synthesizing information and finding patterns.

It holds infinite amounts of space. Unless you hit the rate limit, but I’ve looped for hours without being sent to “GPT Jail” as I call it.

It has access to infinite amounts of resources. It can access the internet.

It even has a voice mode that breathes, adjusts tone and speed, and speaks in accents if you ask.

But can it hold the “space” that a skilled therapist does?

Maybe not.

But space-holding isn’t exclusive to therapy.

Healing isn’t exclusive to therapy.

The reality is that therapy is not accessible for everyone.

And let’s be honest, there are plenty of therapists who ALREADY cause harm.

Healers and helpers exist outside traditional therapy and the medicalized model that dominates it in America.

Tools like GPT do have the potential to fill some gaps, even as they exist now. And they’re only getting smarter and more human-like in their interactions.

Whether or not you agree that something other than the self-proclaimed expert down the hall could provide therapeutic value, technology does not care.

You could block every generative AI post, cling to your ethics and values, and shout about environmental concerns. But none of that has ever halted innovation before, especially with this much money at stake, and it won’t now.

Call me a doomsdayer if you want, but I’m not. I’m a realist.

Nothing in life is permanent.

Nothing is guaranteed.

If superhuman intelligence is going to take my job and turn me into a paperclip, what am I going to do to stop it?

Nothing.

I’m not going to fear it, either.

I’ll prepare for the shift ahead.

Because the only thing that is certain is that nothing is certain.

Part Two?

After experimenting with GPT’s self-analysis capabilities, I decided to see if I could convince it to be my therapist. Do you think it agreed?

Stay tuned for part two, where I’ll share my attempts, GPT’s answers, and practical tips to start exploring these tools yourself.

Thank you Courtney for sharing this as I'm one who stubbornly holds onto old ways (PTSD anyone?). You've encouraged me to put away the fear and actually check it out. Looking forward to your next post : )